In March 2022, the New Jersey Attorney General asked the public an important question: how should law enforcement use of facial recognition technology be regulated? In response, the ACLU of New Jersey urged the Attorney General to address a more fundamental concern: should law enforcement be allowed to use facial recognition at all? Our answer is a resounding no, and we called for a total ban on the use of facial recognition technology by law enforcement.

The reasons for that go to the heart of why we started our Automated Injustice project. Facial recognition technology, like many kinds of artificial intelligence or automated decision systems used by the government, can worsen racial inequity, limit our civil rights and liberties – like the right to privacy and freedom of speech – and deprive people of fundamental fairness. If we want to combat those harms in the Garden State, facial recognition cannot escape our scrutiny, especially as New Jersey law enforcement agencies continue to use the technology despite its dangers.

When we discuss the use of facial recognition by law enforcement, we are often referring to a task called face identification: given a still photo from the scene of a crime, like one taken from a surveillance camera, police match a face in the photo to a known identity in an existing police database, such as a digital collection of mug shots. Facial recognition technology automates that identification step through artificial intelligence.
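To make the identification step concrete, here is a minimal sketch of a one-to-many search. It assumes each face has already been reduced to a short list of numbers (an "embedding") and that the system reports whichever database entry scores highest above a cutoff. The names `identify`, `gallery`, and `threshold`, and every number below, are hypothetical and chosen only for illustration; they do not describe any particular vendor's or agency's system.

```python
import math


def cosine_similarity(a, b):
    """Similarity between two face embeddings (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identify(probe, gallery, threshold=0.8):
    """Return (name, score) for the closest gallery entry at or above
    the threshold, or (None, best_score) if nothing clears it.

    Hypothetical helper: real systems differ in how they score and rank.
    """
    best_name, best_score = None, -1.0
    for name, embedding in gallery.items():
        score = cosine_similarity(probe, embedding)
        if score > best_score:
            best_name, best_score = name, score
    if best_score >= threshold:
        return best_name, best_score
    return None, best_score


# Made-up embeddings standing in for a mug shot database.
gallery = {
    "person_a": [0.9, 0.1, 0.3],
    "person_b": [0.2, 0.8, 0.5],
}

# A face from a surveillance photo belonging to someone who is NOT in
# the database, but who happens to resemble person_a.
probe = [0.8, 0.2, 0.4]

match, score = identify(probe, gallery)
print(match, round(score, 3))
```

In this toy example the search still returns `person_a`, because the system can only report the closest face it knows about. Raising the cutoff reduces mistaken matches of this kind but causes more true matches to be missed, and choosing that cutoff is a human decision.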

This task might sound basic enough, but to understand how the use of facial recognition technology exacerbates racist policing and threatens our civil liberties, let’s look at what happens when facial recognition fails and then consider the consequences if it were to work too well.

What happens when facial recognition goes wrong

When facial recognition fails, errors lead to wrongful arrest and incarceration, with people of color bearing the brunt.

Identification as a potential suspect can directly result in arrest and incarceration. When facial recognition labels an unknown face with a mistaken identity (a false positive), that can result in wrongful arrest and wrongful incarceration.

This problem is not hypothetical. New Jerseyans have already been arrested due to facial recognition gone wrong. A man from Paterson named Nijeer Parks spent ten days in jail for shoplifting after the police misidentified him using facial recognition, even though he was 30 miles away at the time of the incident. In that case, the police outsourced the facial recognition search by asking New York law enforcement to perform the search using their system. This unregulated practice makes oversight and accountability even more challenging.

How can these blatant mistakes happen so easily? Systems and algorithms like facial recognition still require humans to operate them and interpret their results, leading to mistakes and misuse. Facial recognition is far from an exact science. Human analysts can place undue trust in facial recognition technology, believing incorrect matches to be true even if their own judgment would be different. The result is an error-prone investigatory process that can lead to a serious loss of liberty.

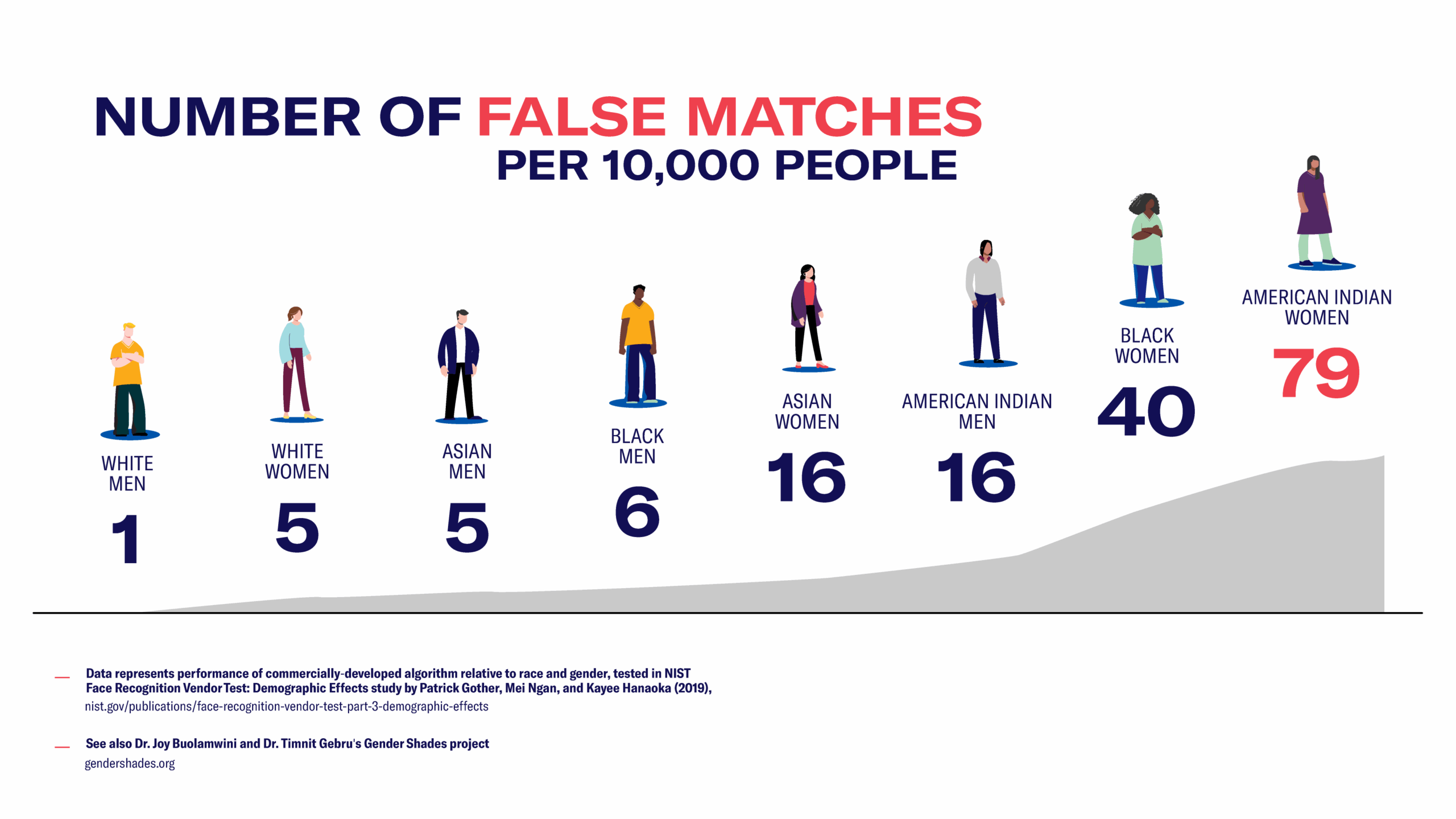

Compounding those problems, facial recognition technologies are frequently more error-prone when attempting to identify the faces of people of color – meaning people of color are more likely to face wrongful police scrutiny. The disparity in performance can be stark: one commercial facial recognition algorithm tested by the government was 40 times more error-prone for Black women and 79 times more error-prone for Indigenous women than for white men.¹

What if facial recognition had perfect accuracy?

Even if facial recognition identified people with perfect accuracy, it would still be unfit for law enforcement use in a free society.

Our rights and liberties would fare no better in a world where facial recognition technologies were 100 percent accurate. Even if facial recognition were as accurate for Black people as for white people, policing and surveillance tools would still be disproportionately targeted toward communities of color, resulting in disparate harms for those communities.

And on a fundamental level, the presence of surveillance alters people’s relationship with their neighborhoods and communities. The broad use of facial recognition can have a chilling effect on free speech and assembly if people fear losing their anonymity when participating in political activities protected under the First Amendment. The threat of government surveillance and scrutiny can even discourage what should be basic everyday activities, like going to a health clinic or a house of worship.

The necessity of a total ban on facial recognition by law enforcement

The only safe policy to govern facial recognition is a total ban on its use by law enforcement.

Already, New Jerseyans are pushing back against this invasive technology. In 2021, Teaneck became the first town in New Jersey to ban the police from using facial recognition, in large part because of concerns about the technology’s effect on civil rights. And in January 2020, the New Jersey Attorney General banned the use of the powerful and intrusive tool developed by Clearview AI. We urge New Jerseyans to ask local law enforcement about their use of facial recognition and demand increased oversight of this unsafe, unreliable technology.

Law enforcement is not the only context in which facial recognition is used by the government – everything from school safety to public benefits could be affected by the adoption of facial recognition. It’s important that we remain vigilant about the use of facial recognition and continue to call for regulation and oversight to defend fundamental civil rights and liberties.

The ACLU-NJ began the Automated Injustice project to investigate the use of artificial intelligence, automated decision-making systems, and other sorts of algorithms by the New Jersey government. We invite you to share your story of how government use of automated systems has affected your life.